Streamline Your API Interactions with Ease

Streamlining Data Across Diverse Architectures

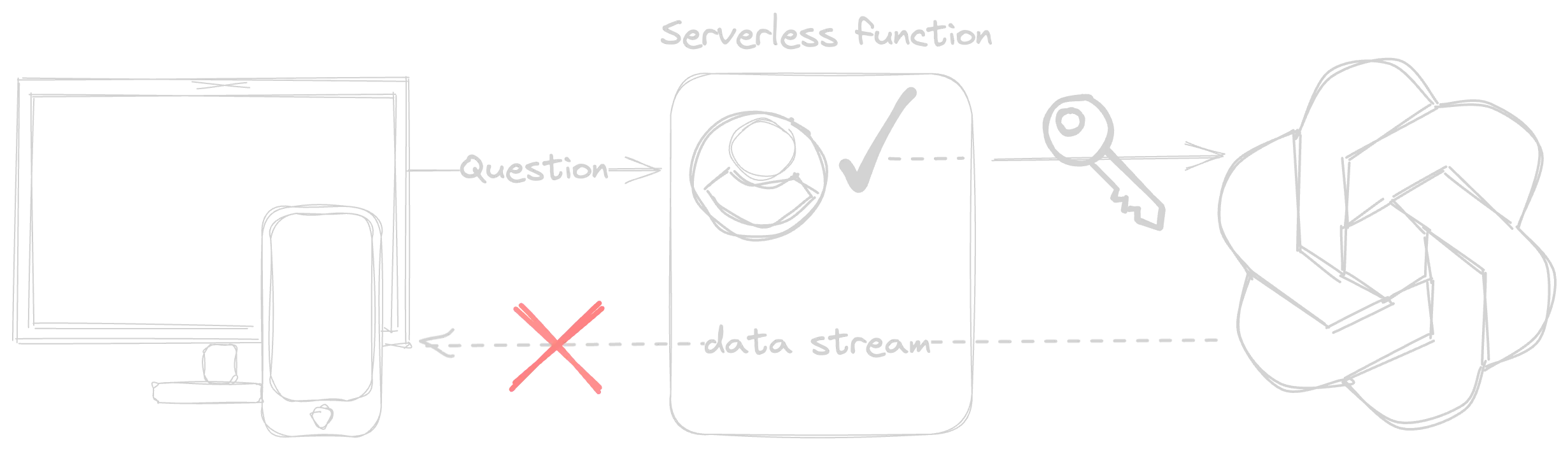

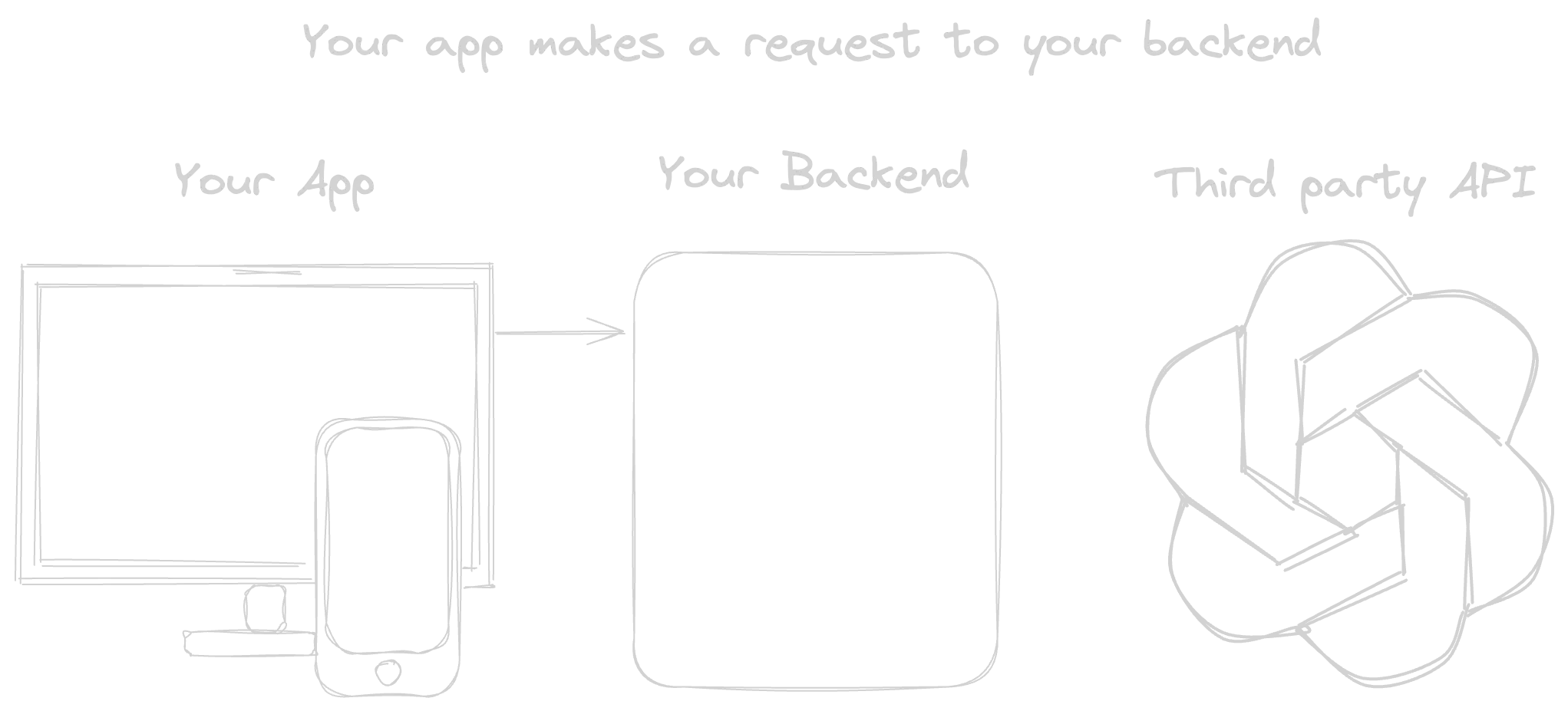

- Traditional and serverless architectures often struggle to stream data to and from APIs efficiently. This limits real-time data delivery and can increase cost and complexity.

- The challenge intensifies with APIs such as OpenAI's, where delays in processing and streaming large volumes of data degrade both user experience and efficiency.

Optimize Data Streaming with Signway

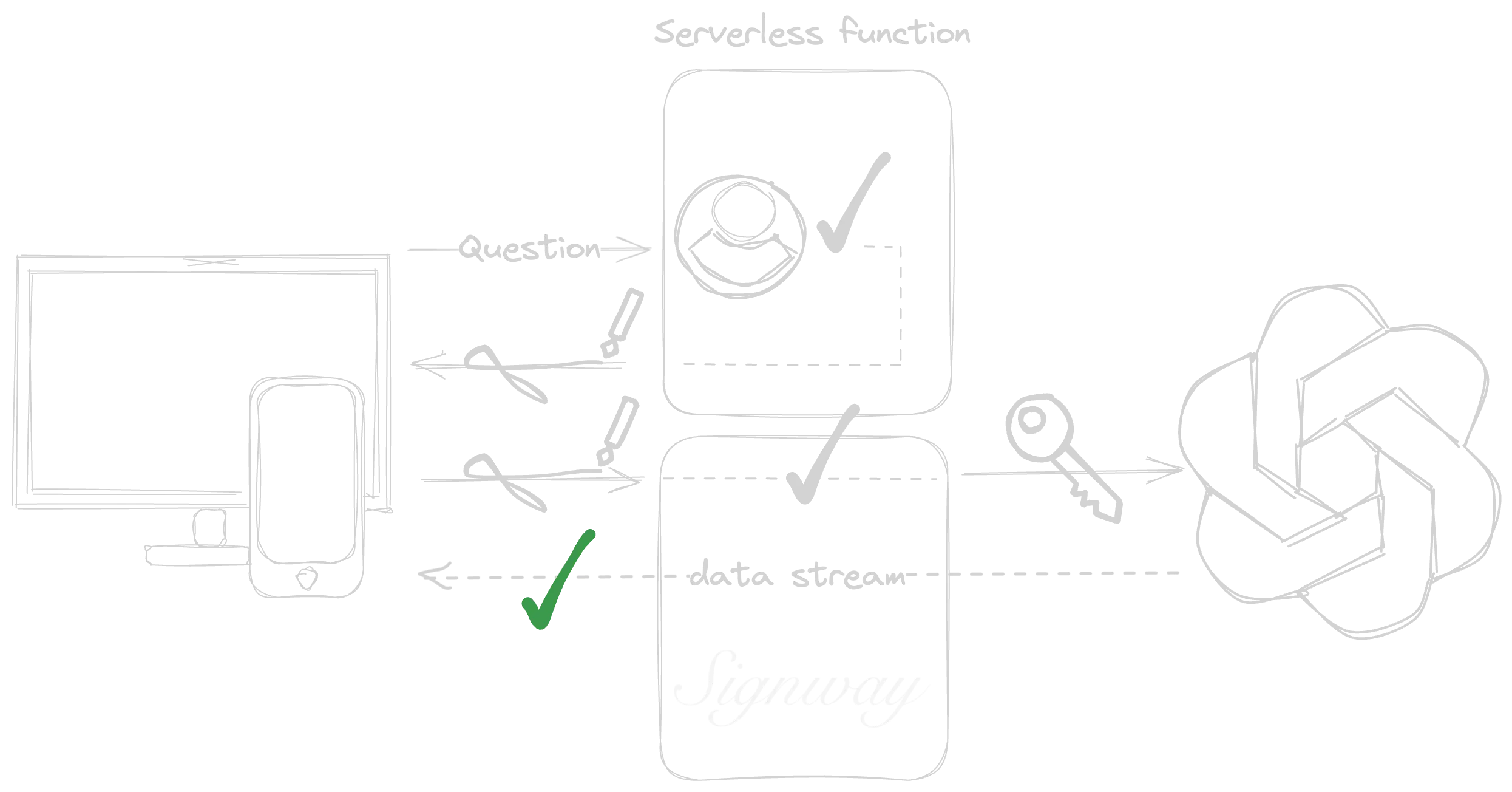

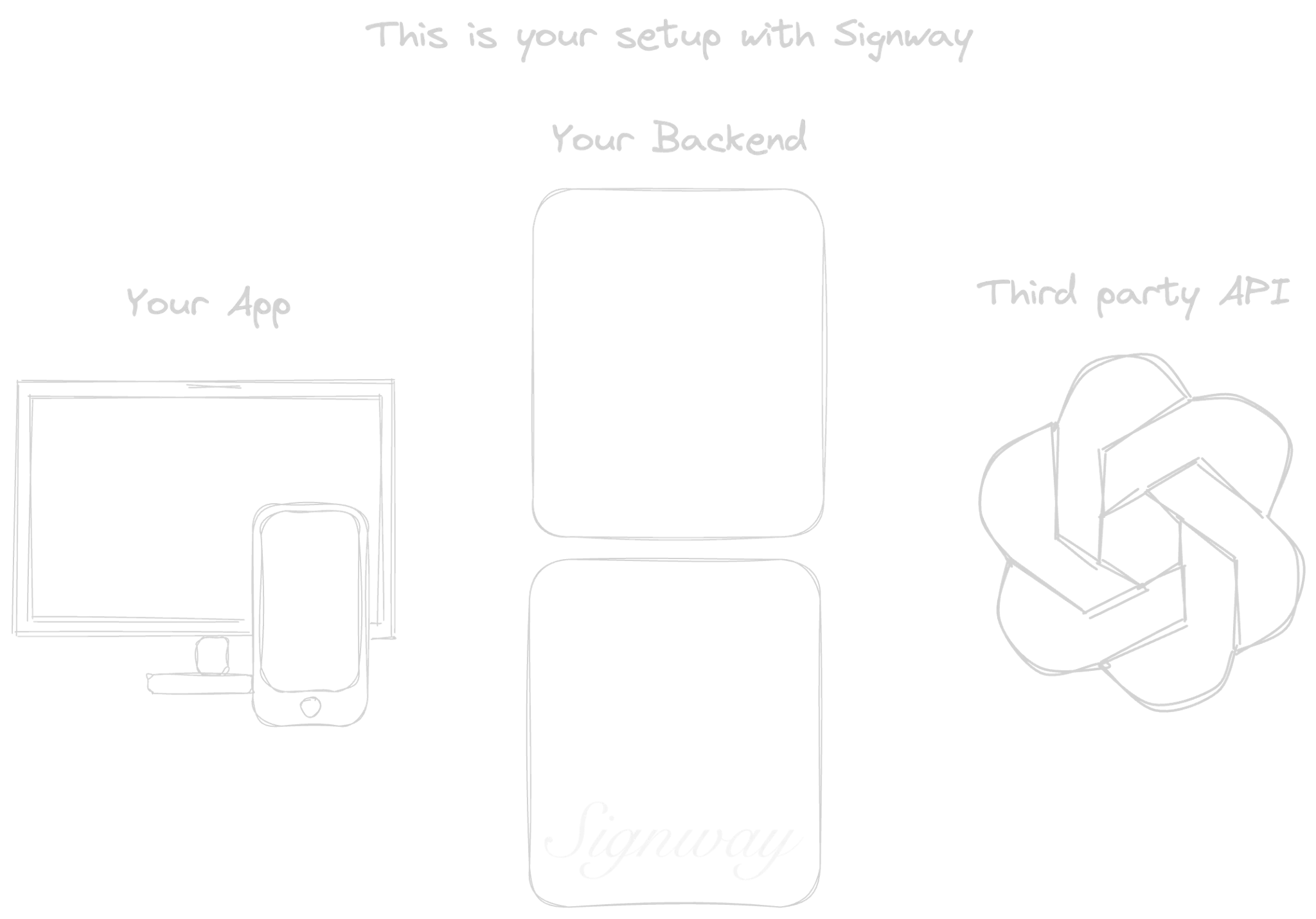

- Signway changes how your applications handle data streaming. It creates short-lived, secure URLs that clients use to make API requests directly, bypassing common streaming bottlenecks.

- With Signway, the entire data streaming process becomes more efficient. Users get faster, more secure access to data, while your systems carry less load and complexity.
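To make the idea of a short-lived, secure URL concrete, here is a minimal sketch of the general technique: the backend signs a URL together with an expiry time, and the gateway accepts the request only if the signature matches and the URL has not expired. This is not Signway's actual signing scheme or API. The host `signway.example`, the `expires` and `signature` query parameters, and the `sign_url` and `verify_url` helpers are all assumptions made for illustration.

```python
import hashlib
import hmac
import time
from urllib.parse import parse_qsl, urlencode, urlparse

# Hypothetical secret shared by the signing backend and the gateway.
SECRET = b"demo-secret"


def sign_url(base_url, secret, expires_in, now=None):
    """Return base_url with an expiry timestamp and an HMAC-SHA256 signature."""
    now = int(time.time()) if now is None else now
    expires = now + expires_in
    payload = f"{base_url}\n{expires}".encode()
    signature = hmac.new(secret, payload, hashlib.sha256).hexdigest()
    query = urlencode({"expires": expires, "signature": signature})
    return f"{base_url}?{query}"


def verify_url(url, secret, now=None):
    """Accept the URL only if it is unexpired and its signature matches."""
    now = int(time.time()) if now is None else now
    parsed = urlparse(url)
    params = dict(parse_qsl(parsed.query))
    try:
        expires = int(params["expires"])
        signature = params["signature"]
    except (KeyError, ValueError):
        return False
    if now > expires:
        return False  # the URL has outlived its validity window
    base_url = f"{parsed.scheme}://{parsed.netloc}{parsed.path}"
    payload = f"{base_url}\n{expires}".encode()
    expected = hmac.new(secret, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    return hmac.compare_digest(expected.encode(), signature.encode())


if __name__ == "__main__":
    url = sign_url("https://signway.example/v1/chat/completions", SECRET, 60)
    print(url)
    print(verify_url(url, SECRET))
```

In this sketch the backend only computes a signature and hands the URL to the client. The streamed response then flows between the client and the gateway, which is the pattern the points above describe.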

How does it work?

Pay-as-you-go model

- On demand

- Fully managed

- 1000 requests/second

- 100 Mb/request data transfer

- 10 Applications

- 0.009 $/Mb transferred
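As a rough worked example of the pay-as-you-go figures above, the sketch below estimates a bill from the listed transfer price. It assumes the cost scales linearly with the data transferred, that no other fees apply, and that the 100 Mb/request figure is a per-request ceiling. The `estimate_cost` helper is hypothetical.

```python
from decimal import Decimal

PRICE_PER_MB = Decimal("0.009")  # $/Mb transferred, taken from the list above
MAX_MB_PER_REQUEST = 100         # assumed to be a per-request ceiling


def estimate_cost(requests, mb_per_request):
    """Estimate the transfer cost in dollars for a number of equal-sized requests."""
    if mb_per_request > MAX_MB_PER_REQUEST:
        raise ValueError("request exceeds the 100 Mb/request limit")
    return PRICE_PER_MB * Decimal(str(mb_per_request)) * requests


if __name__ == "__main__":
    # 2,000 streamed responses of 0.5 Mb each is 1,000 Mb in total.
    cost = estimate_cost(2000, 0.5)
    print(f"${cost:.2f}")  # prints $9.00
```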